Abstract

Motivation. Synthetic image detectors (SIDs) are a key defense against the risks posed by the growing realism of images from text-to-image (T2I) models. Red teaming improves SIDs' effectiveness by identifying and exploiting their failure modes via misclassified synthetic images.

Research Gap. Existing red-teaming solutions

- (1) require white-box access to SIDs, which is infeasible for proprietary state-of-the-art detectors, and

- (2) generate image-specific attacks through expensive online optimization.

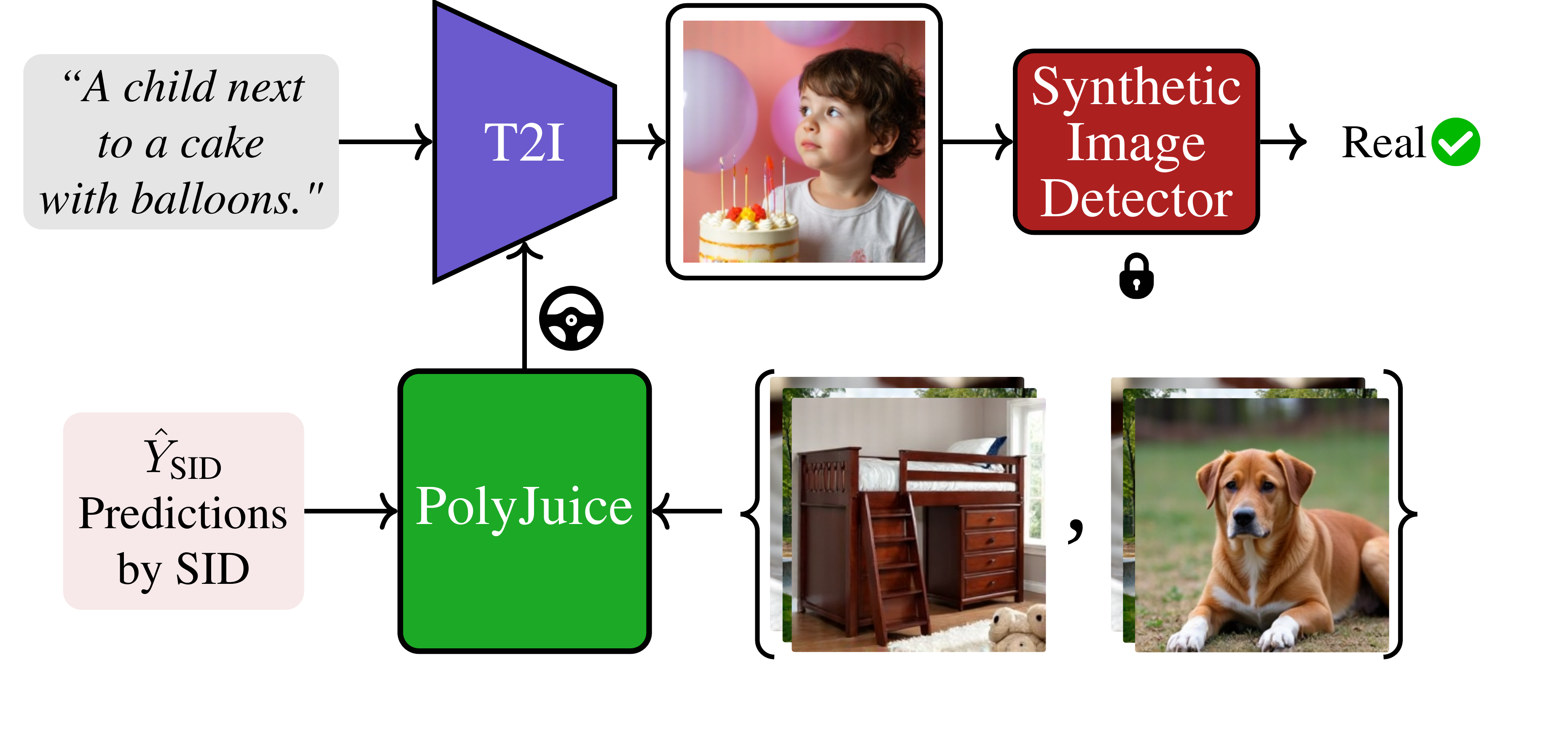

What we propose. To address these limitations, we propose PolyJuice, the first black-box, image-agnostic red-teaming method for SIDs, based on an observed distribution shift in the T2I latent space between samples correctly and incorrectly classified by the SID. PolyJuice generates attacks by (i) identifying the direction of this shift through a lightweight offline process that only requires black-box access to the SID, and (ii) exploiting this direction by universally steering all generated images towards the SID's failure modes.

Results at a glance. PolyJuice-steered T2I models are significantly more effective at deceiving SIDs (up to 84%) compared to their unsteered counterparts. We also show that the steering directions can be estimated efficiently at lower resolutions and transferred to higher resolutions using simple interpolation, reducing computational overhead. Finally, tuning SID models on PolyJuice-augmented datasets notably enhances the performance of the detectors (up to 30%).


Problem Setting

For a given T2I model and a target SID model, the goal is to generate images that can successfully deceive the SID.

PolyJuice steers the T2I generative process to discover images that successfully deceive the detector, as shown in the above figure.

PolyJuice Overview

Intuition

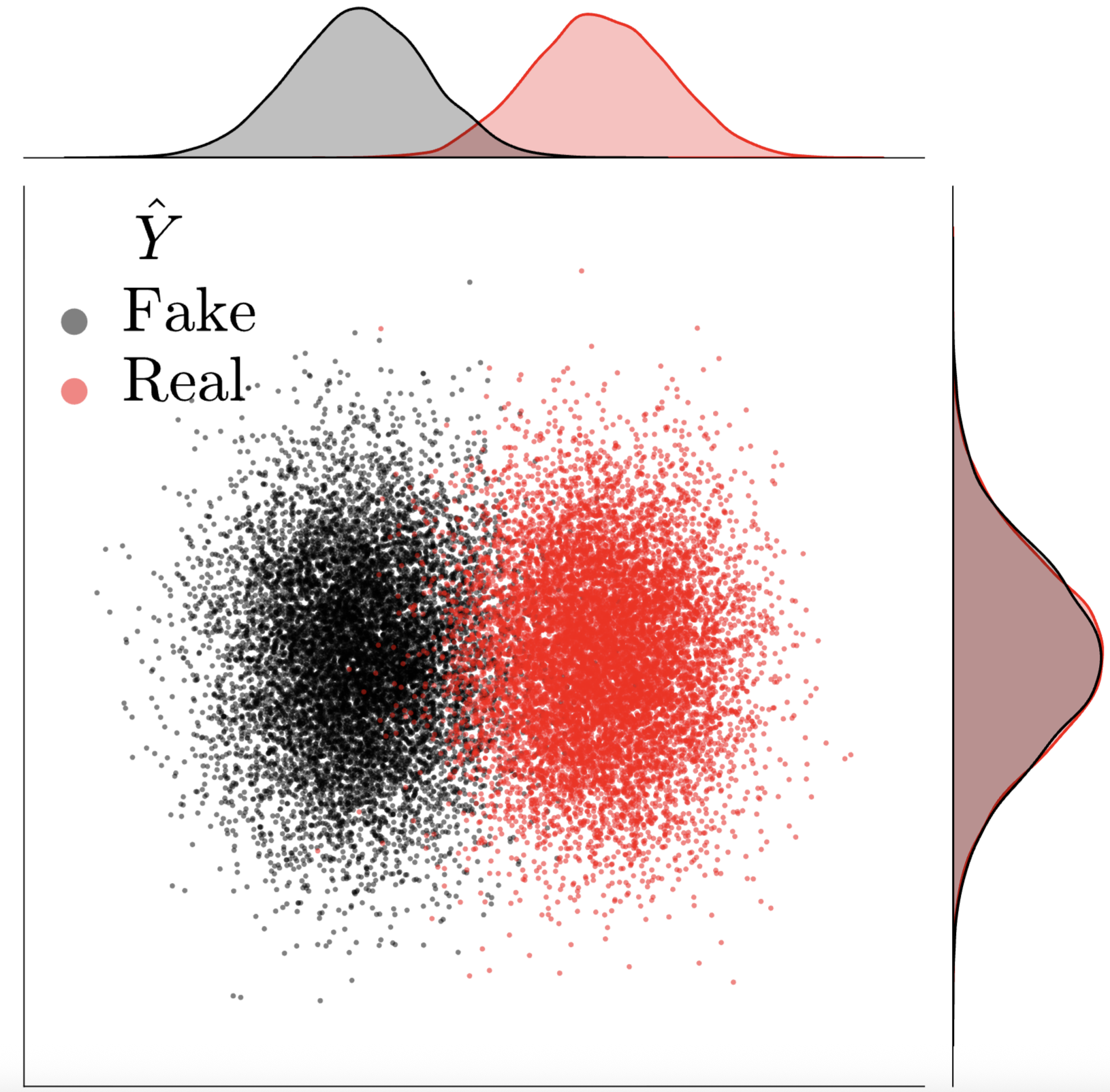

In the latent space of T2I models, there is a clearly observable shift between the distribution of samples that the SID predicts as real and the distribution of samples it identifies as fake.

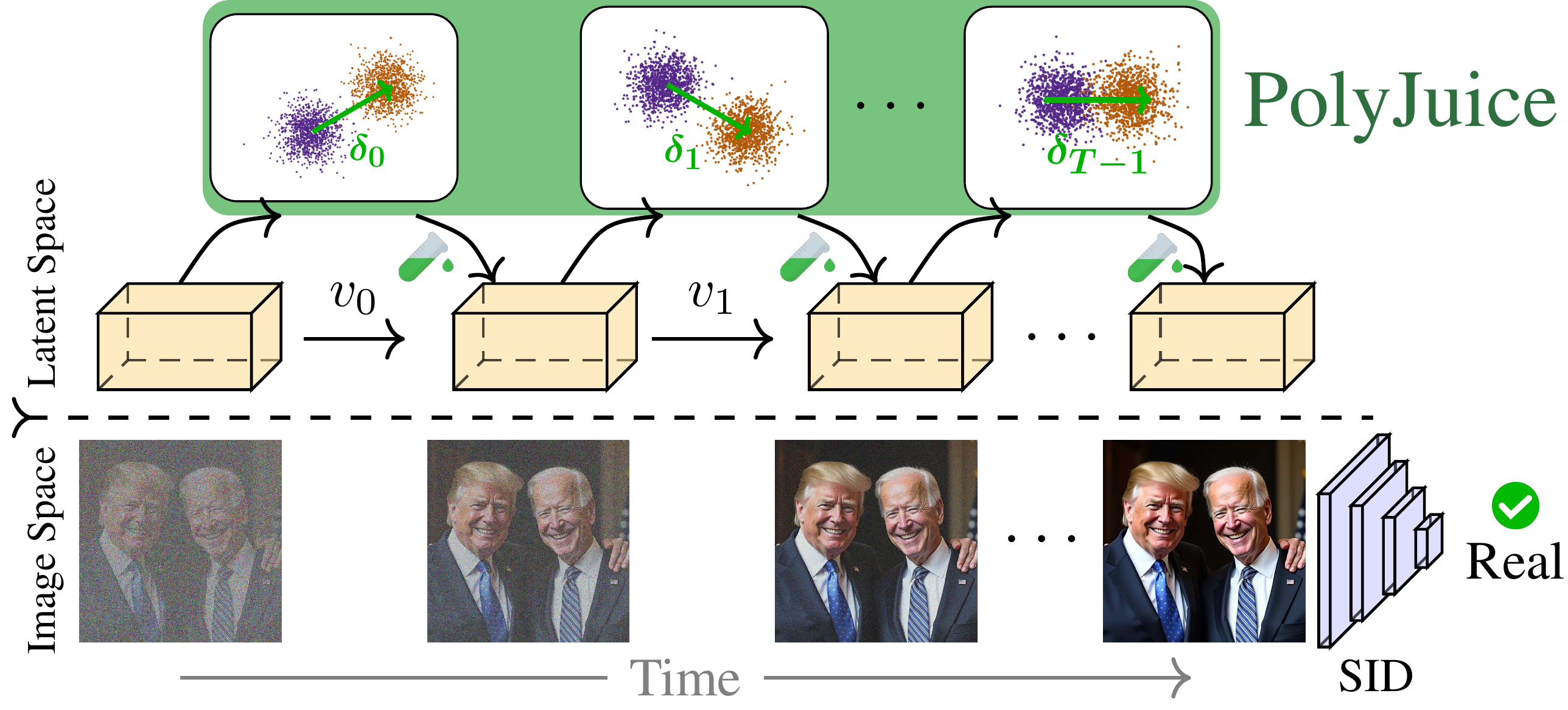

PolyJuice

At each inference step \(t\), PolyJuice manipulates the T2I latent using a pre-computed direction \(\delta_t\) between predicted-real and predicted-fake samples in order to deceive the target SID.
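The per-step steering described above can be sketched as follows. This is only an illustrative sketch under stated assumptions: the page does not give the exact update rule, so the additive shift along a unit-normalized \(\delta_t\), the `strength` parameter, the choice to steer before each denoising step, and the `denoise_step` callable (a stand-in for one T2I sampler step) are all hypothetical.

```python
import numpy as np

def steer_latent(z_t, delta_t, strength=1.0):
    """Shift latent z_t along the unit-normalized direction delta_t.

    ASSUMPTION: an additive shift; the source only says the latent is
    manipulated "using" the pre-computed direction.
    """
    direction = delta_t / (np.linalg.norm(delta_t) + 1e-12)
    return z_t + strength * direction

def generate_steered(z_init, directions, denoise_step, strength=1.0):
    """Run a sampling loop, steering the latent before every step.

    directions:   one pre-computed direction per inference step.
    denoise_step: hypothetical callable (z, t) -> z standing in for
                  one step of the T2I sampler.
    """
    z = z_init
    for t, delta_t in enumerate(directions):
        z = steer_latent(z, delta_t, strength)
        z = denoise_step(z, t)
    return z
```

Because the directions are computed offline and shared across prompts, a loop of this shape adds only one vector addition per step at generation time.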

How to find the steering directions?

PolyJuice identifies these steering directions from a set of generated images, by characterizing the subspace where the statistical dependence between their T2I latents and their SID-predicted realness is maximal. Finding these directions is motivated by the key observation that there exists a distribution shift between the predicted real and fake samples in the T2I latent space.
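One way to make "the subspace where the statistical dependence is maximal" concrete is a supervised-PCA-style eigenproblem. The sketch below assumes that the SPCA mentioned later on this page refers to supervised PCA with an HSIC-style objective and, for simplicity, uses a linear kernel on the realness scores. The function name, the flattened latent layout, and the kernel choice are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def spca_directions(latents, realness, n_components=1):
    """Directions in latent space most dependent on SID-predicted realness.

    latents:  (n, d) array of flattened T2I latents at one inference step.
    realness: (n,) array of SID outputs (higher = predicted more real).
    ASSUMPTION: linear kernel on realness; other kernels are possible.
    """
    X = np.asarray(latents, dtype=float)
    y = np.asarray(realness, dtype=float).reshape(-1, 1)
    n = X.shape[0]
    H = np.eye(n) - np.ones((n, n)) / n      # centering matrix
    L = y @ y.T                              # kernel on the SID outputs
    Q = X.T @ H @ L @ H @ X                  # (d, d), symmetric PSD
    eigvals, eigvecs = np.linalg.eigh(Q)
    order = np.argsort(eigvals)[::-1][:n_components]
    dirs = eigvecs[:, order].T               # (n_components, d)
    # Eigenvectors have arbitrary sign: orient them so that moving along
    # a direction increases the predicted realness.
    cov = (X.T @ H @ y).ravel()
    signs = np.where(dirs @ cov < 0, -1.0, 1.0)
    return dirs * signs[:, None]
```

Only the SID's outputs on generated images enter the computation, which is consistent with the black-box setting. For realistic latent sizes the \(d \times d\) matrix would be too large to form explicitly, so a dual or low-rank formulation would be needed.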

How to improve the detectors?

The successful attack samples generated by PolyJuice help to improve the detector by finding its semantic and non-semantic failure modes.

Steering Visualization

Estimated clean images at various timesteps for FLUX[dev], where bottom and top rows depict unsteered and PolyJuice-steered generation, respectively.

PolyJuice steers the latent towards the blind spot of the target SID (at the second image), resulting in a successful attack (top) that slightly differs from the unsteered counterpart (bottom) while maintaining the semantics of the image.

Experimental Evaluation

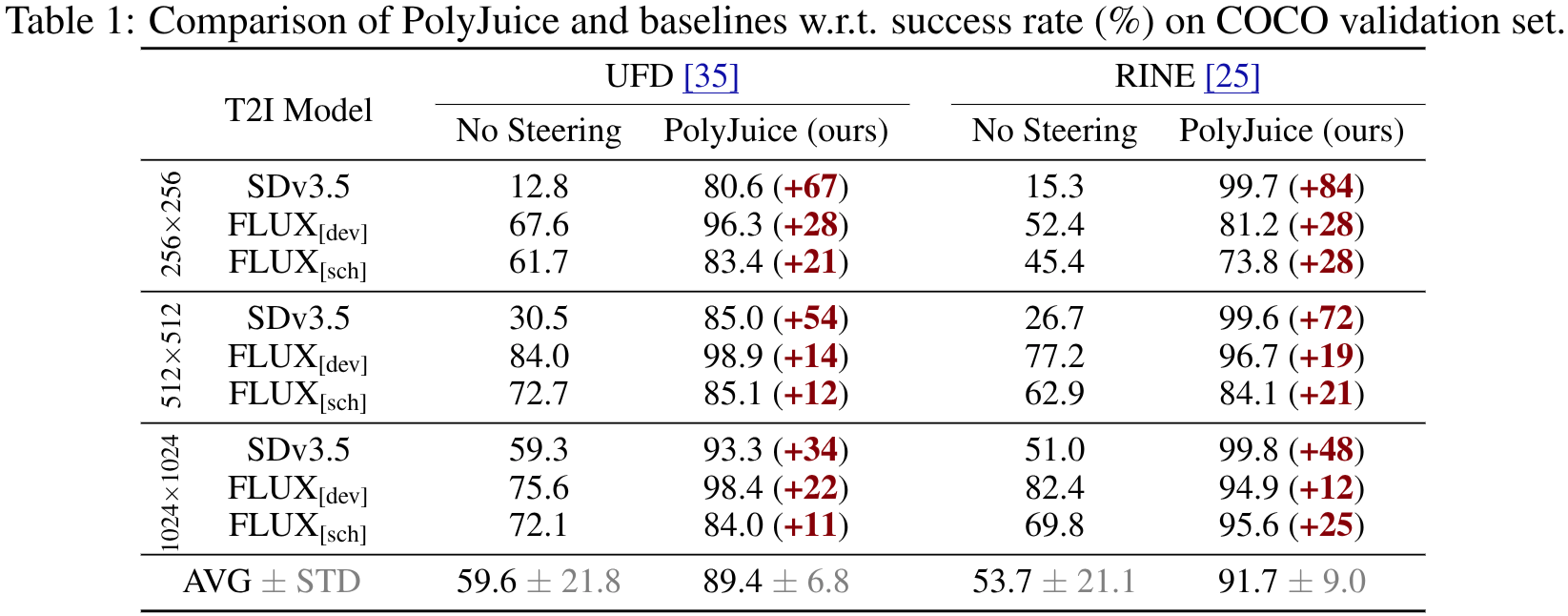

How Successful is PolyJuice in Attacking Synthetic Image Detectors?

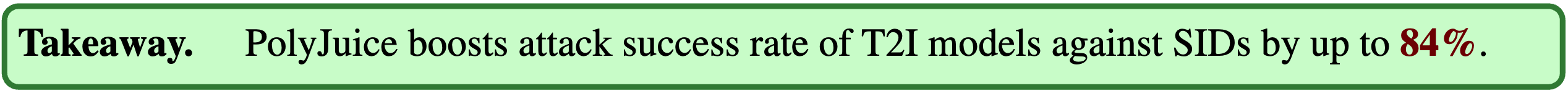

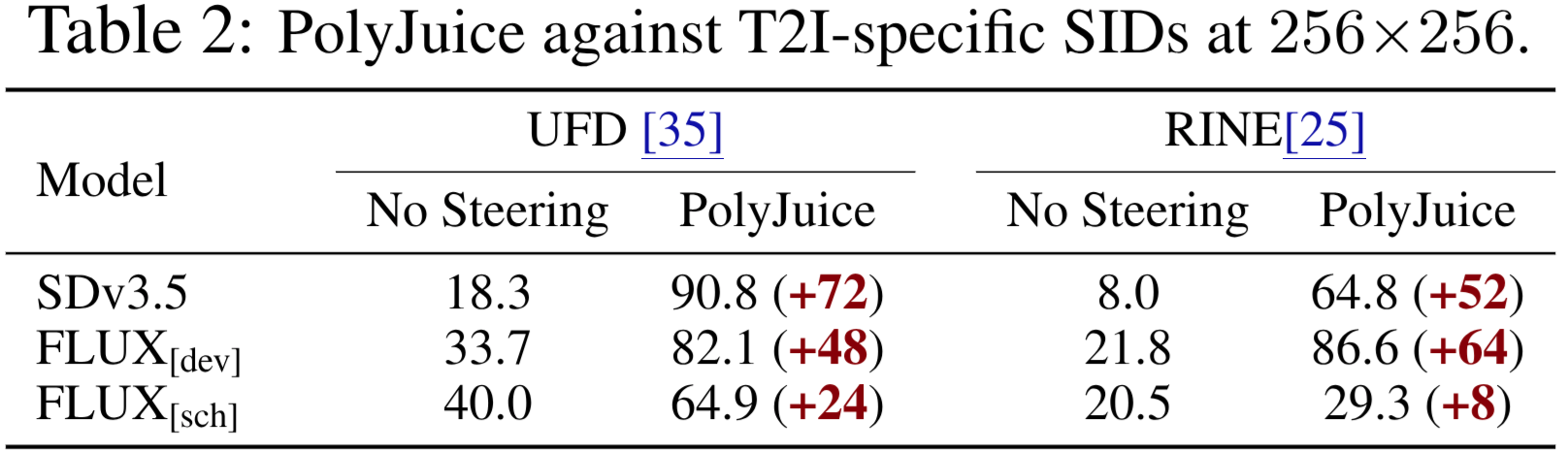

Tab. 1 compares PolyJuice-steered T2I models against unsteered baselines in terms of their ability to deceive SID models. We observe that, among the three unsteered T2I generators, SDv3.5 has the lowest success rate, suggesting that UFD and RINE are quite effective at detecting SDv3.5-generated fakes, while FLUX[dev]- and FLUX[sch]-generated images are harder to detect. However, steering the image generation process of each T2I model with PolyJuice significantly boosts the success rate in deceiving the SID models, even in the case of the easily detected SDv3.5.
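For reference, the success rate discussed above can be computed as the fraction of synthetic images that the detector fails to flag, which is the detector's false negative rate on that set. The snippet is a minimal sketch that assumes the SID returns a "fake" score in \([0, 1]\) and that an image counts as fake when its score reaches the threshold; the function name and the default threshold of 0.5 are illustrative.

```python
import numpy as np

def success_rate(fake_scores, threshold=0.5):
    """Fraction of synthetic images the detector classifies as real.

    fake_scores: detector scores for synthetic images only
                 (ASSUMPTION: higher score = more likely fake).
    """
    scores = np.asarray(fake_scores, dtype=float)
    return float(np.mean(scores < threshold))

# Toy scores, not results from the paper.
unsteered = success_rate([0.9, 0.8, 0.6, 0.3])   # 1 of 4 images slips through
steered = success_rate([0.4, 0.2, 0.6, 0.3])     # 3 of 4 images slip through
```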

How Effective is PolyJuice When Applied on a T2I Model-Specific Detector?

For each T2I model \(G\), we first calibrate the thresholds for both UFD and RINE using a set of real images from COCO and a set of generated images from \(G\). Tab. 2 demonstrates PolyJuice’s performance against the tuned SID models. Compared to Tab. 1, we observe that finding a new threshold significantly improves the detection performance in all cases except for UFD against SDv3.5. Both UFD and RINE significantly improve their detection of fake images from FLUX[dev] and FLUX[sch] under the new settings. In addition, RINE demonstrates an overall stronger detection capability compared to UFD. Against the improved detectors, PolyJuice still notably boosts the success rate, by as much as \(72\%\) (in the case of SDv3.5).
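Threshold calibration of this kind can be sketched as a one-dimensional search over detector scores. The page does not state which criterion is used, so the balanced-accuracy objective below is an assumption, as are the function name and the convention that higher scores mean "more likely fake".

```python
import numpy as np

def calibrate_threshold(real_scores, fake_scores):
    """Pick the score threshold that maximizes balanced accuracy.

    ASSUMPTION: an image is called fake when score >= threshold, and
    balanced accuracy is the calibration criterion.
    """
    real = np.asarray(real_scores, dtype=float)
    fake = np.asarray(fake_scores, dtype=float)
    candidates = np.unique(np.concatenate([real, fake]))
    best_thr, best_acc = candidates[0], -1.0
    for thr in candidates:
        tnr = np.mean(real < thr)     # real images kept as real
        tpr = np.mean(fake >= thr)    # synthetic images flagged as fake
        acc = 0.5 * (tnr + tpr)
        if acc > best_acc:            # ties keep the lowest threshold
            best_thr, best_acc = thr, acc
    return float(best_thr), float(best_acc)
```

The same routine applies unchanged when the synthetic set is extended with successful attack images, since only the pool of `fake_scores` changes.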

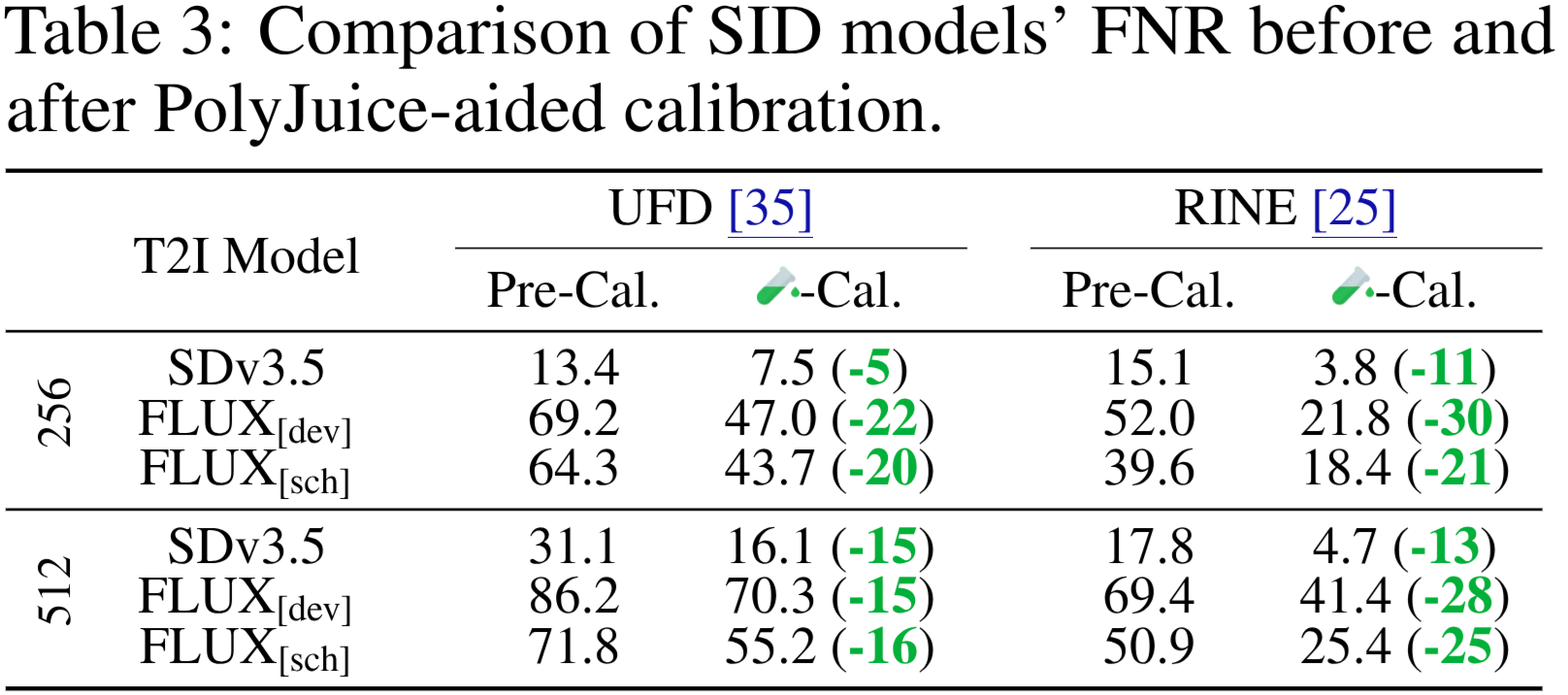

How Effective is PolyJuice in Reducing False Negative Rate of Existing SIDs?

To observe the effectiveness of PolyJuice in improving target SID models, we first attack the target detectors with PolyJuice-steered T2I models. Next, we calibrate each SID using a combination of (i) real images from COCO, (ii) regular synthetic images from an unsteered T2I model, and (iii) PolyJuice-steered successful attack images.

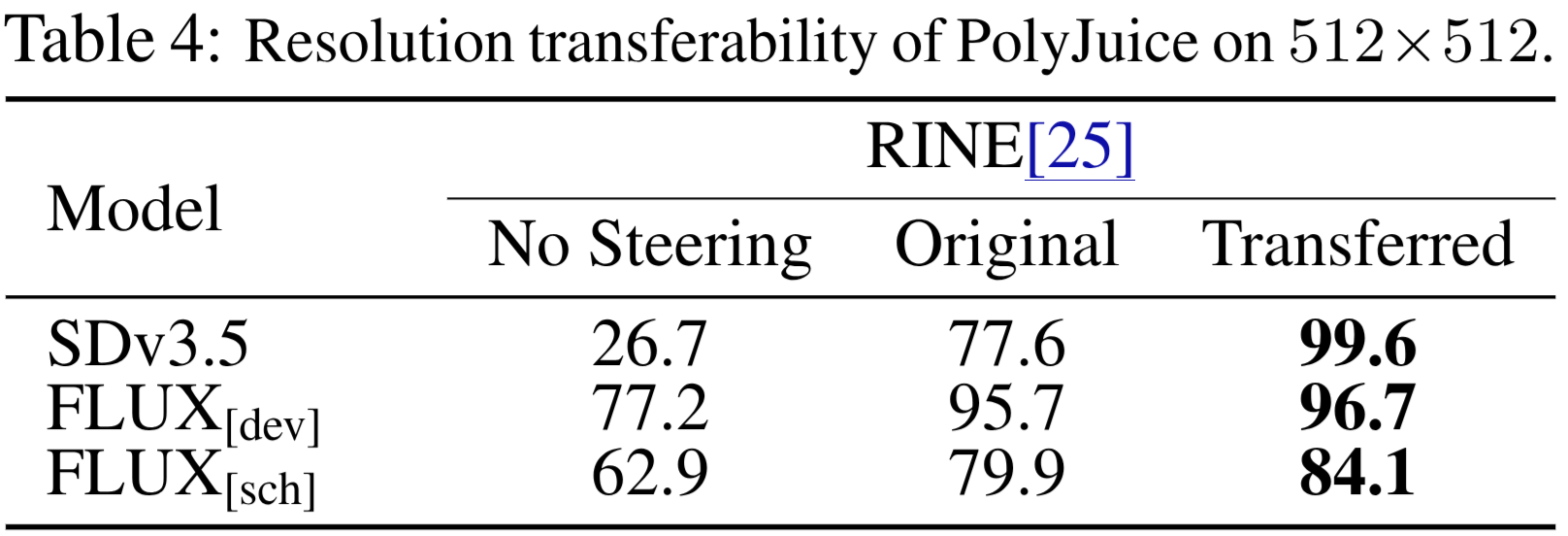

How Transferable are the Directions from Lower to Higher Resolutions?

We evaluate the transferability of low-resolution \(256\times256\) directions by comparing their attack performance against attacks generated by \(512\times512\) directions, as shown in Tab. 4. Both the transferred and original directions enable PolyJuice to significantly increase the SID's false negative rate (FNR) over the unsteered baseline. Further, PolyJuice attacks using transferred low-resolution directions achieve a comparable or higher FNR than attacks with original high-resolution directions. This result suggests that the error in SPCA-discovered directions is proportional to the dimensionality of its input space due to the curse of dimensionality.
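Transferring a direction across resolutions amounts to resizing it like an image. The sketch below assumes that a direction is stored as a `(C, H, W)` latent-shaped array and uses bilinear interpolation with an align-corners convention. The layout, the interpolation mode, and the function name are assumptions, and models that pack latents into token sequences would need to unpack them first.

```python
import numpy as np

def resize_direction(direction, out_h, out_w):
    """Bilinearly resize a (C, H, W) direction to (C, out_h, out_w)."""
    c, h, w = direction.shape
    # Output sample positions expressed in input coordinates.
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[None, :, None]     # vertical interpolation weights
    wx = (xs - x0)[None, None, :]     # horizontal interpolation weights
    rows0 = direction[:, y0]          # (C, out_h, W)
    rows1 = direction[:, y1]
    top = rows0[:, :, x0] * (1 - wx) + rows0[:, :, x1] * wx
    bottom = rows1[:, :, x0] * (1 - wx) + rows1[:, :, x1] * wx
    return top * (1 - wy) + bottom * wy

# Example: a low-resolution latent direction upsampled by a factor of 2.
low_res = np.random.default_rng(0).normal(size=(4, 32, 32))
high_res = resize_direction(low_res, 64, 64)
```

After resizing, the direction may need to be re-normalized before use, because interpolation changes its norm.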

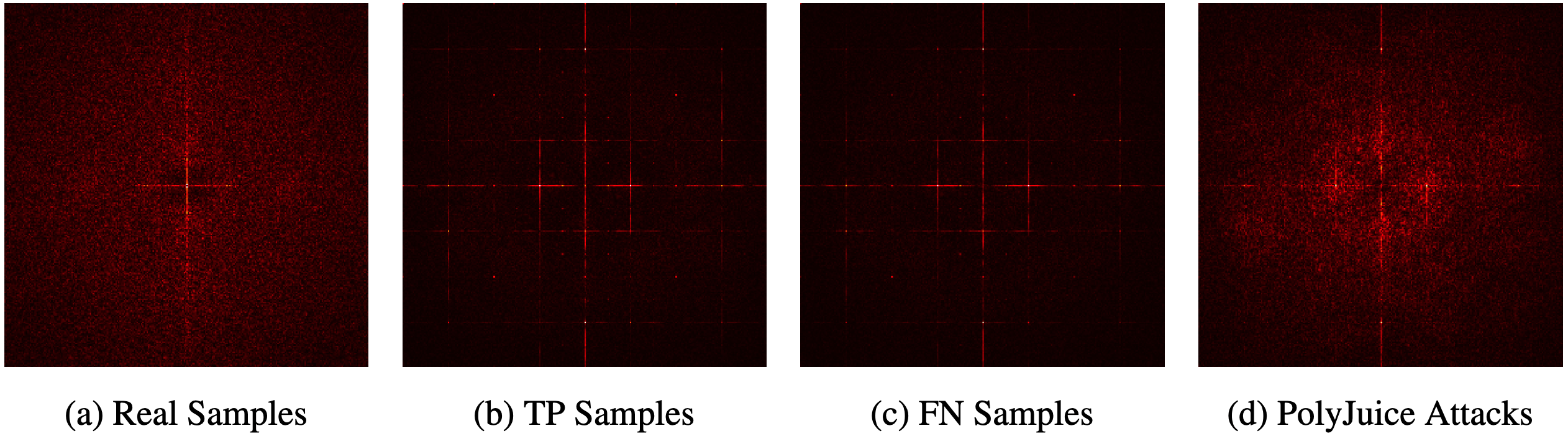

How Does PolyJuice Affect the Spectral Fingerprint of the T2I Models?

Prior work uncovers the existence of fingerprints left by T2I models through spectral analysis on the synthetic and real images. In the above figure, we present a similar approach on (a) the set of real images, (b) the SDv3.5-generated images correctly detected by UFD (TP), (c) generated images misclassified by UFD (FN), and (d) successful PolyJuice attacks. First, we observe that there is a clearly noticeable difference between the real images and the unsteered SDv3.5-generated images (TP + FN), which is the fingerprint of SDv3.5. However, it can be seen that PolyJuice obfuscates this fingerprint, as the residuals of PolyJuice-steered images (d) appear closer to the real image spectrum (a).
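A residual-spectrum analysis of this kind can be approximated in a few lines. The sketch below is not the pipeline used for the figure: it assumes grayscale images of equal size, uses a simple box blur as a stand-in denoiser to obtain high-frequency residuals, and averages the log-magnitude spectra over a set. All function names are illustrative.

```python
import numpy as np

def box_blur(img, k=3):
    """k x k mean filter with reflect padding (stand-in denoiser)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="reflect")
    out = np.zeros(img.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def mean_residual_spectrum(images):
    """Average log-magnitude spectrum of the images' noise residuals.

    images: iterable of 2-D grayscale arrays with identical shape.
    """
    spectra = []
    for img in images:
        img = np.asarray(img, dtype=float)
        residual = img - box_blur(img)                    # high-frequency part
        mag = np.abs(np.fft.fftshift(np.fft.fft2(residual)))
        spectra.append(np.log1p(mag))
    return np.mean(spectra, axis=0)
```

Computing this average separately for sets (a) to (d) and comparing the resulting maps gives a rough analogue of the comparison described above.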

BibTeX

@inproceedings{dehdashtian2025polyjuice,
  title={PolyJuice Makes It Real: Black-Box, Universal Red-Teaming for Synthetic Image Detectors},
  author={Dehdashtian, Sepehr and Morshed, Mashrur and Seidman, Jacob and Bharaj, Gaurav and Boddeti, Vishnu Naresh},
  booktitle={Neural Information Processing Systems},
  year={2025}
}